Deep learning

Deep learning is an artificial intelligence technique and a subset of machine learning. It trains artificial neural networks with many layers to recognize and model complex patterns in data. Deep learning is widely regarded as one of the most significant breakthroughs in AI because it lets machines perform tasks that previously required human intelligence.

Here is what all the excitement about deep learning in AI is about:

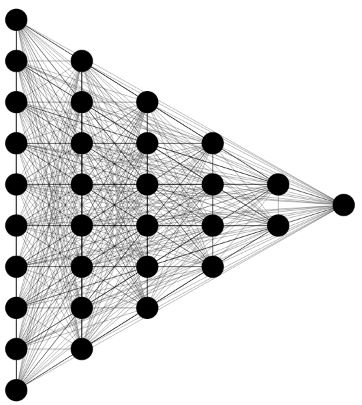

1. Concepts and Basics

Deep learning draws its inspiration from the structure and function of the human brain, particularly its networks of interconnected neurons. Artificial neural networks are built from many stacked layers of artificial neurons. Each layer transforms its input, usually by applying a nonlinear activation function, and passes the result to the next layer.

Artificial Neural Networks (ANNs):

Normally, an ANN has three kinds of layers:

1. Input layer: Accepts raw data, for example images or text.

2. Hidden layers: Process the input data to extract features from it. A deep network stacks many of these, hence the name "deep" learning.

3. Output layer: Produces the final prediction or decision.

Neurons:

Each neuron of a neural network is a computational unit that takes weighted inputs, sums them up, applies an activation function, and passes the output to the next layer.
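The computation a single neuron performs can be sketched in a few lines of Python. This is a minimal illustration, not a library implementation: the function names `neuron` and `sigmoid` and the input values are hypothetical choices made for this example.

```python
import math

def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus a bias, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values chosen only for illustration.
output = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(output)  # the weighted sum is 0.0, so the sigmoid returns 0.5
```

In a real network the weights and bias are not hand-picked like this. They are learned during training.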

Activation Functions:

Activation functions introduce nonlinearity into the network, which lets it learn complex patterns. ReLU, sigmoid, and tanh are among the most widely used activation functions.
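As a rough sketch, the three activation functions named above can be written with Python's standard `math` module. The sample inputs are arbitrary and only show how each function reshapes negative, zero, and positive values.

```python
import math

def relu(z):
    """Rectified linear unit: pass positives through, clamp negatives to zero."""
    return max(0.0, z)

def sigmoid(z):
    """Map any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Map any real number into (-1, 1), centered at zero."""
    return math.tanh(z)

# Arbitrary sample inputs for comparison.
for z in (-2.0, 0.0, 2.0):
    print(z, relu(z), round(sigmoid(z), 4), round(tanh(z), 4))
```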

2. Training Deep Networks

Deep networks are mainly trained with a method called backpropagation, in which the network adjusts its weights and biases according to the errors in its predictions.

Forward Propagation:

Data is passed from the input layer through to the output layer to produce a prediction.

Loss Function:

A loss function measures the difference between the predicted output and the actual target value. Mean squared error is common for regression and cross-entropy for classification.
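The two loss functions mentioned here can be sketched as follows. This is a simplified illustration: `cross_entropy` assumes the model already outputs a probability for each class and that the target is a single class index, and all the numbers are made up.

```python
import math

def mean_squared_error(predictions, targets):
    """Average squared difference, commonly used for regression."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def cross_entropy(probabilities, true_class):
    """Negative log of the probability assigned to the correct class."""
    return -math.log(probabilities[true_class])

# Made-up predictions and targets.
mse = mean_squared_error([2.0, 4.0], [1.0, 4.0])     # (1 + 0) / 2 = 0.5
ce = cross_entropy([0.25, 0.5, 0.25], true_class=1)  # -log(0.5)
print(mse, ce)
```

Both losses shrink toward zero as the prediction approaches the target, which is what training tries to achieve.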

Backpropagation:

Errors are propagated backward through the network to compute the gradient of the loss with respect to each weight. An optimizer such as Stochastic Gradient Descent (SGD) or Adam then uses those gradients to update the weights and minimize the loss function.
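To make the update rule concrete, here is a minimal sketch of plain (full-batch) gradient descent on the simplest possible case: a single linear neuron with a mean squared error loss. The toy data, learning rate, and step count are assumptions chosen for illustration. A real deep network applies the same chain-rule idea layer by layer and usually relies on a library's automatic differentiation.

```python
# Toy data generated from y = 2x + 1 (hypothetical, for illustration only).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = 0.0, 0.0       # parameters to learn
learning_rate = 0.05  # assumed step size

def loss(w, b):
    """Mean squared error of the linear model w * x + b on the toy data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

initial_loss = loss(w, b)
for step in range(2000):
    # Gradients of the loss with respect to w and b, derived with the chain rule.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / len(xs)
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / len(xs)
    # Step against the gradient to reduce the loss.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b
final_loss = loss(w, b)

print(round(w, 3), round(b, 3))  # approaches 2.0 and 1.0
```

SGD differs from this sketch by estimating the gradient from a small random batch at each step, and Adam additionally adapts the step size per parameter.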

3. Architectures of Deep Networks

Various deep neural network architectures are designed for different AI tasks:

Feedforward Neural Networks (FNNs):

The simplest kind of neural network, where information moves in one direction, from input to output.
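A feedforward pass can be sketched as a chain of fully connected layers. The helper `dense` and all weights below are hypothetical, hand-picked so the arithmetic is easy to follow. A trained network would learn its weights instead.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def dense(inputs, weights, biases, activation):
    """Fully connected layer: each row of `weights` feeds one neuron."""
    return [activation(sum(x * w for x, w in zip(inputs, row)) + b)
            for row, b in zip(weights, biases)]

# Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output.
hidden_w = [[1.0, -1.0], [0.5, 0.5]]
hidden_b = [0.0, 0.0]
output_w = [[1.0, 2.0]]
output_b = [0.5]

x = [2.0, 1.0]
hidden = dense(x, hidden_w, hidden_b, relu)               # [1.0, 1.5]
prediction = dense(hidden, output_w, output_b, identity)  # [4.5]
print(hidden, prediction)
```

Data only ever flows forward here: the output of one layer is the input of the next, with no loops.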

Convolutional Neural Networks (CNNs):

Used for image-related tasks such as classification, object detection, and segmentation, with convolutional layers detecting spatial hierarchies of features such as edges and textures.
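The core operation of a convolutional layer can be sketched in plain Python. This is a simplified, single-channel version with no padding or stride, and the image and kernel values are made up. As in most deep learning libraries, the operation is technically a cross-correlation, since the kernel is not flipped.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` and sum the elementwise products at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

# Made-up 4x4 image: dark left half (0), bright right half (1).
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]

# A hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is nonzero only where the window straddles the edge. In a CNN the kernel values are learned rather than written by hand.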

Recurrent Neural Networks (RNNs):

Suitable for processing sequential data like time series or text, with loops that carry information across time steps. Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) are popular variants for handling long-term dependencies.
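The recurrent loop can be illustrated with a deliberately tiny sketch: a basic RNN with a single scalar hidden state. The weights are arbitrary assumptions, and LSTM and GRU cells add gating on top of this idea that is not shown here.

```python
import math

def rnn_forward(sequence, w_x, w_h, b):
    """Basic RNN: the hidden state h is fed back in at every time step."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Arbitrary weights; the input is a single pulse followed by zeros.
states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print([round(s, 4) for s in states])
```

Even though the second and third inputs are zero, the hidden state stays positive, because it still carries a fading trace of the first input.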

Generative Adversarial Networks (GANs):

GANs generate new data resembling a given dataset, such as realistic images. A GAN consists of two networks: a generator that produces fake data and a discriminator that tries to distinguish real data from fake.

Transformer Networks:

Prominent in NLP tasks, transformers use self-attention mechanisms for sequence processing and excel at tasks like translation and summarization. Popular models include BERT and GPT.
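The self-attention mechanism can be sketched as scaled dot-product attention. This is a stripped-down illustration under stated assumptions: a real transformer first maps each token to separate query, key, and value vectors with learned projections and runs several attention heads in parallel, whereas this sketch reuses the made-up token vectors directly for all three roles.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Each output is a weighted average of all values, weighted by query-key similarity."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Three made-up 2-dimensional token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
print(attended)
```

Every output row mixes information from all positions in the sequence at once, which is what lets transformers relate distant words without stepping through the sequence one item at a time.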

4. Applications of Deep Learning in AI

Deep learning is applied across many AI domains, driving advancements in:

Computer Vision:

Deep CNNs power tasks like image classification, object detection, and medical image analysis. Autonomous vehicles also use deep learning to perceive their surroundings.

Natural Language Processing (NLP):

Transformer models such as GPT drive language modeling, sentiment analysis, machine translation, and chatbots, advancing AI's text generation capabilities.

Speech Recognition:

Deep learning improves automatic speech recognition systems, such as those behind voice assistants like Siri and Alexa.

Reinforcement Learning:

Combining deep learning with reinforcement learning has resulted in breakthroughs in game playing (e.g., AlphaGo) and robotics.

Generative Models:

GANs and variational autoencoders (VAEs) create realistic images, videos, and music, and also handle tasks like enhancing low-resolution images.

5. Challenges and Considerations

Deep learning faces several challenges, including:

Data Requirements:

Deep learning methods rely heavily on large amounts of labeled data, which can be expensive and time-consuming to acquire.

Computational Resources:

Training deep models requires significant computational power, and training is often accelerated with GPUs and TPUs.

Overfitting:

Deep networks tend to overfit, performing well on training data but poorly on new data. Techniques like dropout, regularization, and data augmentation combat this.
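Two of the techniques named above, dropout and regularization, can be sketched in a few lines. This is an illustrative sketch only: it shows the common "inverted" form of dropout and an L2 weight penalty as one example of regularization, and the rate, strength, and weights are assumed values.

```python
import random

def dropout(activations, rate, rng):
    """Inverted dropout: zero each activation with probability `rate`,
    and scale the survivors so the expected value stays the same."""
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def l2_penalty(weights, strength):
    """L2 regularization term added to the loss to discourage large weights."""
    return strength * sum(w * w for w in weights)

rng = random.Random(0)  # seeded so the sketch is repeatable
dropped = dropout([1.0] * 10, rate=0.5, rng=rng)
penalty = l2_penalty([0.5, -1.0, 2.0], strength=0.01)  # 0.01 * 5.25
print(dropped, penalty)
```

Dropout is applied only during training. At prediction time all activations are kept.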

Interpretability:

Deep learning models are often called "black boxes" because their decision-making processes are difficult to interpret. Explainable AI research seeks to make these models more transparent.

Ethical Considerations:

Deploying deep learning systems raises ethical concerns related to bias, fairness, and privacy, which need to be addressed to ensure AI benefits society.

6. The Future of Deep Learning

Deep learning is rapidly evolving, with emerging trends including:

Self-Supervised Learning:

Reducing reliance on labeled data by learning representations from unlabeled data.
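One common way to get training signal from unlabeled data is to hide part of the input and ask the model to predict it. The sketch below builds such (input, target) pairs from a raw sentence. The function name `masked_pairs` and the `[MASK]` token are illustrative assumptions, not a specific library's API.

```python
def masked_pairs(sentence, mask_token="[MASK]"):
    """Create one training pair per word: the sentence with that word hidden,
    and the hidden word as the target. No human labels are needed."""
    words = sentence.split()
    pairs = []
    for i, word in enumerate(words):
        masked = words[:i] + [mask_token] + words[i + 1:]
        pairs.append((" ".join(masked), word))
    return pairs

pairs = masked_pairs("deep learning needs data")
for masked_input, target in pairs:
    print(masked_input, "->", target)
```

The labels here come from the data itself, which is why this style of training scales to very large unlabeled text collections.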

Neural Architecture Search (NAS):

Automating the search for the best neural network architecture for a task.

Quantum Machine Learning:

Combining quantum computing with deep learning, with the aim of solving problems that are infeasible for classical computers.

Multimodal Learning:

AI that can process and understand data from multiple modalities, such as text, images, and speech.

Conclusion

Deep learning is a transformational technology in AI, allowing machines to achieve human-like performance on complex tasks. It continues to push AI's boundaries, impacting industries such as healthcare, finance, and entertainment. As research progresses, deep learning will likely become even more central to future AI advancements.

Syed Ali Zulqurnain
